Precision, on the other hand, is a measure of variation. If you aimed to type 30 but typed 34, 36, 35, 35, and 35, that's an average of 35, which is not accurate. However, your results didn't vary by a large margin, so they are precise.
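To put numbers on that example, here is a minimal sketch that separates the two ideas. The variable names (`target`, `samples`, `bias`, `spread`) are made up for illustration, and using the population standard deviation as the measure of spread is an assumption; other measures of variation would work just as well.

```python
from statistics import mean, pstdev

target = 30                      # the value we were aiming for
samples = [34, 36, 35, 35, 35]   # what we actually got

# Accuracy: how far the average lands from the target.
bias = mean(samples) - target

# Precision: how much the individual results vary around their own average.
spread = pstdev(samples)

print(f"average = {mean(samples)}, bias = {bias}, spread = {spread:.2f}")
```

A bias of 5 against a spread well under 1 is the "precise but not accurate" case: the results cluster tightly, just in the wrong place.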

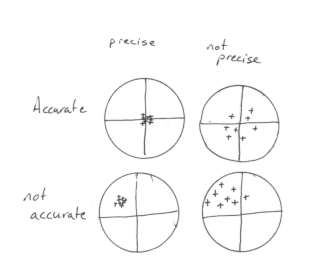

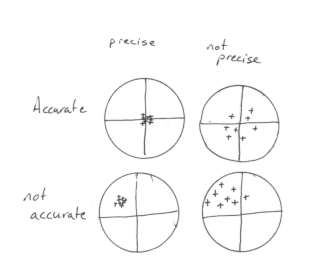

To show this in a picture, one commonly used to explain the topic, imagine you're an archer. The results of aiming for the bullseye are shown with labels.

Overall, precision is often preferred to accuracy when the offset can be compensated for. If a digital sample is being taken, software can compensate for the offset to give a result that is both accurate and precise. However, in systems where compensation is difficult, accuracy may be preferred, as simple filtering techniques can be used to average the results. In such a case, both precision and accuracy can be gained at the cost of being able to perceive rapid changes.
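Both approaches can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `compensate_offset` and `moving_average` are hypothetical, the sample values are invented, and a plain moving average stands in for the "simple filtering techniques" mentioned above.

```python
from statistics import mean

def compensate_offset(samples, offset):
    """Subtract a known, constant offset from every sample."""
    return [s - offset for s in samples]

def moving_average(samples, window):
    """Average each run of `window` consecutive samples."""
    return [mean(samples[i:i + window])
            for i in range(len(samples) - window + 1)]

# Precise but offset: tight readings, all about 5 too high for a target of 30.
precise = [34, 36, 35, 35, 35]
corrected = compensate_offset(precise, 5)

# Accurate but noisy: scattered readings that average out to the target of 30.
noisy = [24, 36, 30, 27, 33]
smoothed = moving_average(noisy, 3)

# The cost of filtering: a sudden jump from 0 to 10 gets smeared into a ramp.
step = [0, 0, 0, 10, 10, 10]
smeared = moving_average(step, 3)

print(corrected)
print(smoothed)
print(smeared)
```

The corrected readings sit around 30 with the same small spread as before, and the smoothed readings stay within 1 of 30 instead of swinging by 6. The step input, however, takes several samples to get from 0 to 10 after filtering, which is the loss of rapid changes described above.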